Deploy AI models efficiently across on-prem, cloud, or hybrid environments with automated setup, versioning, and scaling, helping teams reduce manual workload and configuration errors.

Automate and Accelerate AI Workflows at Scale

We’re transforming fragmented AI pipelines into streamlined, scalable, and fully reproducible workflows that enable faster innovation and more efficient experimentation. Orchestrate AI is the ideal solution for IT operations teams, data scientists, generative AI creators, and research groups managing complex AI workloads.

Simple and Smart Orchestration

From model deployment and infrastructure optimization to job management and real-time monitoring, we’ve got you covered. With intelligent scheduling, resource-aware execution, and support for frameworks like ComfyUI, LangChain, Topaz, Ollama, Stable Diffusion, KoboldCPP, AutoGPT, and more, Orchestrate AI maximizes performance while streamlining workflows for IT teams, data scientists, and AI creators.

Built For Data Centers, Content Creators, and AI Labs

RAVEL Orchestrate AI Highlights

Easy Model Deployment Across Hybrid Infrastructure

Optimize GPU/CPU Resource Utilization

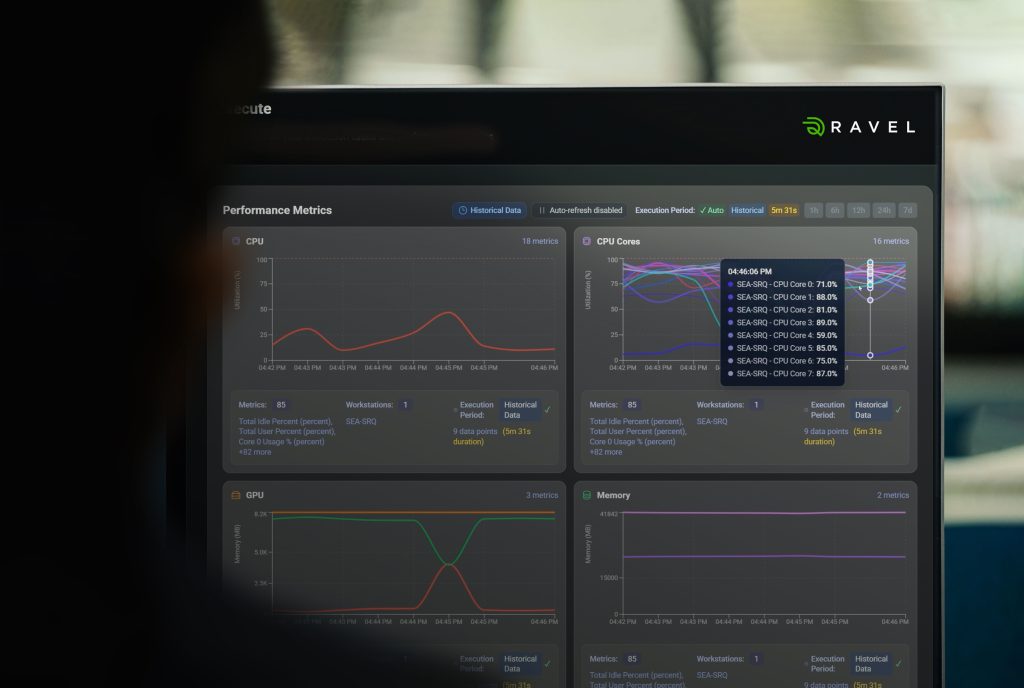

Use intelligent, resource-aware job scheduling to ensure AI workloads make the best use of available compute. Save on energy and costs by minimizing idle time and maximizing throughput.

Run and Manage Generative AI Workflows

Enable artists and creators to run large generative models (e.g., using ComfyUI or Stable Diffusion) with optimized resource allocation, job queuing, and monitoring. Best of all, no infrastructure expertise is required.

Accelerate AI Research and Experimentation

Support data scientists and research teams in launching multiple model training and evaluation jobs in parallel with consistent, reproducible environments. This helps organizations gain a competitive edge by shortening iteration cycles and bringing products to market faster.

Centralized Job Management for Distributed Teams

Allow multiple users to queue, manage, and monitor AI jobs from a unified interface. Ideal for collaborative environments where many team members share compute infrastructure across multiple locations.

Infrastructure-Aware Scheduling and Load Balancing

Automatically match workloads to the most appropriate hardware (e.g., choosing between A100 GPUs and CPUs) based on task requirements. Designed to improve performance and reduce bottlenecks.
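For readers who want a concrete picture of hardware matching, here is a minimal Python sketch of the general idea. It is not RAVEL's scheduler: the `Node` and `Job` classes, the `pick_node` function, the node names, and the best-fit rule are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical compute node; not an Orchestrate AI type."""
    name: str
    kind: str            # "gpu" or "cpu"
    free_memory_gb: int


@dataclass
class Job:
    """Hypothetical job description with its task requirements."""
    name: str
    needs_gpu: bool
    memory_gb: int


def pick_node(job: Job, nodes: List[Node]) -> Optional[Node]:
    """Return the best-fitting node for the job, or None if nothing fits."""
    wanted = "gpu" if job.needs_gpu else "cpu"
    candidates = [
        n for n in nodes
        if n.kind == wanted and n.free_memory_gb >= job.memory_gb
    ]
    if not candidates:
        return None
    # Best fit (an assumed policy): take the smallest node that still
    # satisfies the request, so larger nodes stay free for larger jobs.
    return min(candidates, key=lambda n: n.free_memory_gb)


nodes = [
    Node("a100-01", "gpu", 80),
    Node("a100-02", "gpu", 40),
    Node("cpu-01", "cpu", 256),
]

print(pick_node(Job("image-generation", True, 24), nodes).name)
print(pick_node(Job("preprocessing", False, 16), nodes).name)
```

In this sketch the GPU job lands on the smaller of the two GPU nodes that can hold it, and the CPU-only job never occupies a GPU. A production scheduler would weigh many more signals, such as queue depth, priorities, and data locality.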

Multi-Tenant Infrastructure Management

Support multiple projects or users with isolated workflows and permissions. Perfect for enterprise R&D labs, academic institutions, or shared innovation centers.

Smarter AI Management Starts Here!

Supermicro x RAVEL Turnkey AI Workload Solution is available now

Built for teams looking to scale their AI capabilities without added complexity, this plug-and-play solution combines Supermicro’s cutting-edge hardware (powered by AMD Ryzen Threadripper PRO CPUs and NVIDIA RTX 6000 Blackwell GPUs) with the intelligent orchestration capabilities of RAVEL Orchestrate AI.
